Computer-science_A-level_Cie

-

computers-and-components6 主题

-

logic-gates-and-logic-circuits2 主题

-

central-processing-unit-cpu-architecture6 主题

-

assembly-language-4 主题

-

bit-manipulation1 主题

-

operating-systems3 主题

-

language-translators2 主题

-

data-security3 主题

-

data-integrity1 主题

-

ethics-and-ownership3 主题

-

database-concepts3 主题

-

database-management-systems-dbms-1 主题

-

data-definition-language-ddl-and-data-manipulation-language-dml1 主题

-

computational-thinking-skills1 主题

-

algorithms14 主题

-

data-types-and-records2 主题

-

arrays2 主题

-

files1 主题

-

introduction-to-abstract-data-types-adt1 主题

-

programming-basics1 主题

-

constructs2 主题

-

structured-programming1 主题

-

program-development-life-cycle2 主题

-

program-design-2 主题

-

program-testing-and-maintenance3 主题

-

user-defined-data-types1 主题

-

file-organisation-and-access-3 主题

-

floating-point-numbers-representation-and-manipulation3 主题

-

protocols2 主题

-

circuit-switching-packet-switching1 主题

-

processors-parallel-processing-and-virtual-machines5 主题

-

boolean-algebra-and-logic-circuits4 主题

-

purposes-of-an-operating-system-os3 主题

-

translation-software3 主题

-

encryption-encryption-protocols-and-digital-certificates3 主题

-

artificial-intelligence-ai4 主题

-

recursion1 主题

-

programming-paradigms4 主题

-

object-oriented-programming7 主题

-

file-processing-and-exception-handling2 主题

-

data-representation5 主题

-

multimedia3 主题

-

compression2 主题

-

networks-and-the-internet11 主题

algorithm-analysis

Suitability of algorithms

Why do we need to check the suitability of algorithms?

-

Algorithms must be compared to determine how suitable they are for solving a specific problem or working with a particular set of data

-

Key factors in comparing algorithms include:

-

How long they take to complete a task (time complexity)

-

How much memory they require to complete a task (space complexity)

-

-

Generally, the most suitable algorithm is the one that solves the problem in the shortest time and using the least memory

Time complexity

-

Time complexity refers to the number of operations or steps an algorithm takes to complete, not the actual time in seconds or minutes

-

It is important to understand that time complexity is independent of hardware performance

-

A faster CPU may execute instructions more quickly, but the number of instructions the algorithm performs remains the same

Analogy: Running a marathon

-

The marathon distance = the algorithm

-

The runners = different CPUs

-

Each runner finishes at a different time, but the distance (number of steps) is the same

Space complexity

-

Space complexity refers to the amount of memory an algorithm needs to complete its task

-

Memory usage includes:

-

Space for input data

-

Temporary variables

-

Any additional data structures (e.g. arrays, stacks, queues)

-

-

For example:

-

If an algorithm creates a new copy of the input data, it will require more memory than one that modifies the data in place

-

An algorithm that uses recursion may use more memory due to the call stack

-

Big O Notation

What is Big O Notation?

-

Big O Notation is a mathematical way to describe the time and space complexity of an algorithm

-

It shows how well an algorithm scales as the size of the input increases

-

It describes the order of growth (efficiency) of an algorithm

-

It is hardware-independent – it measures steps or operations, not seconds

-

Named after German mathematician Edmund Landau

-

Big O Rules:

-

Keep only the dominant term (largest exponent or growth rate)

-

Ignore constants – they become insignificant as input size grows

Types of time complexity

O(1) – Constant time

-

The algorithm always takes the same number of steps, regardless of input size

totalLength = len(s)

-

The length is returned in one step, even if the string is very long

O(n) – Linear time

-

The number of steps increases proportionally with the input size

n

function sumNumbers1(n) sum = 0 for i = 1 to n sum = sum + i next i return sum

endfunction-

For input size

n, the algorithm performsn + 1steps -

Example with multiple loops:

function loop x = input for i = 1 to x print(x) next i a = 0 for j = 1 to x a = a + 1 next j print(a) b = 1 for k = 1 to x b = b + 1 next k print(b)

endfunction-

Total steps =

3x + 5⇒ Still O(n) as x grows

O(n^2) – Polynomial time

-

Performance is proportional to the square of the input size

-

Caused by nested loops

function timesTable x = 12 for i = 1 to x for j = 1 to x print(i, " x ", j, " = ", i*j) next j next i for k = 1 to x print(k) next k print("Hello World!")

endfunction-

Steps:

x^2 + x + 2⇒ Dominant term isx^2⇒ O(n^2) -

As x increases, the impact of lower-order terms and constants becomes negligible

O(log n) – Logarithmic time

-

The number of steps grows slowly even as input size grows rapidly

-

Common in binary search

-

Example:

log2(8) = 3, because 2^3 = 8 -

Doubling input size barely affects total steps

-

|

x |

log2(x) |

|---|---|

|

1 |

0 |

|

8 |

3 |

|

1,024 |

10 |

|

1,048,576 |

20 |

|

1,073,741,824 |

30 |

-

Very efficient – useful for large datasets

O(2^n) – Exponential time

-

The number of steps doubles for every additional input value

-

Grows extremely fast

-

Very inefficient, but some problems (e.g. brute-force solutions) fall into this category

|

x |

2^x |

|

1 |

2 |

|

10 |

1,024 |

|

100 |

~10^30 |

-

Rare in practice unless necessary

O(n log n) – Linear logarithmic time

-

Algorithms that divide data and process each part

-

Example: Merge Sort

-

More efficient than O(n^2), commonly used in sorting

Best, average, and worst case

-

Every algorithm has different performance depending on input:

|

Case |

Description |

Example (Linear Search) |

|---|---|---|

|

Best case |

Most efficient scenario |

Item is first → O(1) |

|

Average case |

Typical input |

Item is in middle → O(n) |

|

Worst case |

Least efficient scenario |

Item not found → O(n) |

-

Big O notation usually describes the worst-case performance to guarantee a minimum standard

How to derive Big O from code

-

Count loops:

-

1 loop = O(n)

-

2 nested loops = O(n^2)

-

No loops = O(1)

-

-

Ignore constants and lower-order terms:

-

3n^2 + 4n + 5→ O(n^2)

-

Examples

function sumNumbers1(n) sum = 0 for i = 1 to n sum = sum + i next i return sum

endfunction-

One loop → O(n)

function sumNumbers2(n) sum = n * (n + 1) / 2 return sum

endfunction-

One statement → O(1)

function loop (3 loops, 5 statements) ⇒ O(n)

endfunctionfunction timesTable (nested loop + 1 loop + 1 statement) ⇒ O(n^2)

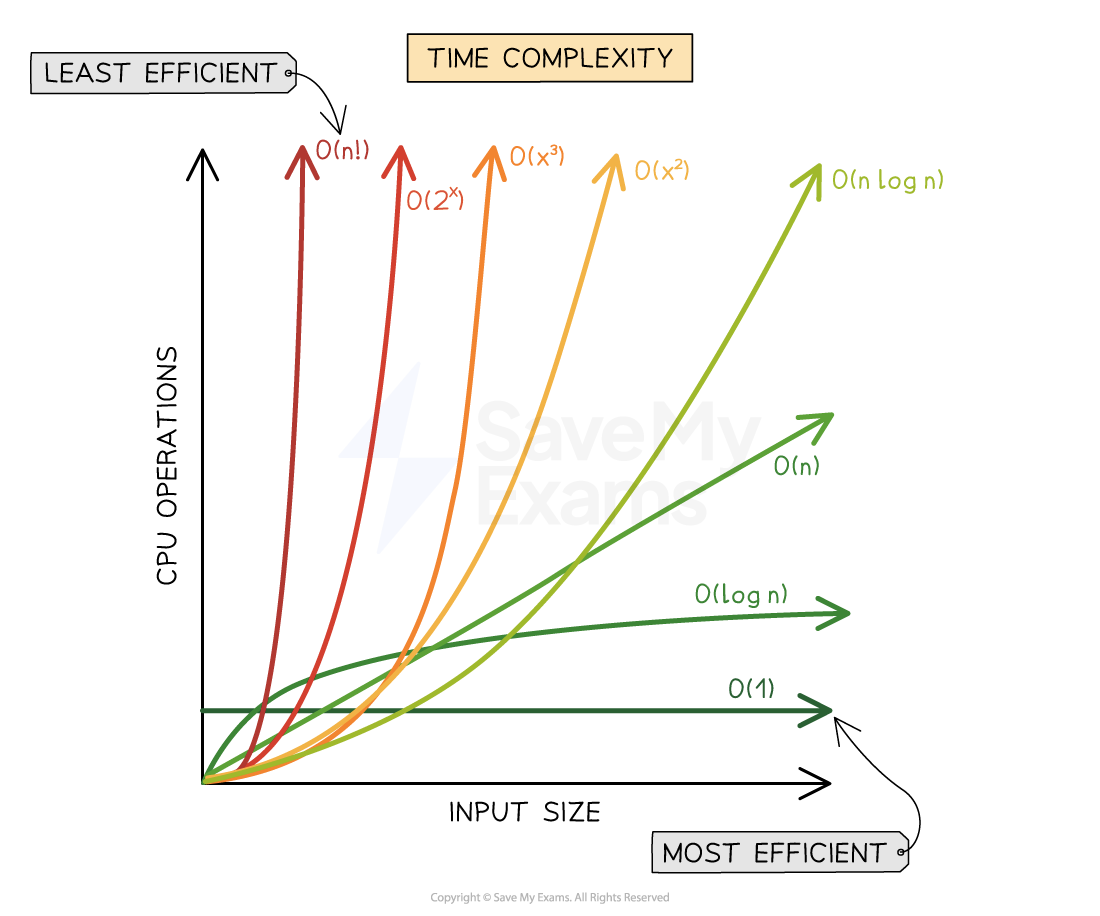

endfunctionTime complexity graph

-

From the graph, exponential time (2n) grows the quickest and therefore is the most inefficient form of algorithm, followed by polynomial time (n2), linear time (n), logarithmic time (logn) and finally constant time (1)

-

Further time complexities exist such as factorial time (n!) which performs worse than exponential time and linear-logarithmic time (nlogn) which sits between polynomial and linear time. Merge sort is an example of an algorithm that uses O(nlogn) time complexity

-

The Big-O time complexity of an algorithm can be derived by inspecting its component parts

-

It is important to know that when determining an algorithm’s Big-O that only the dominant factor is used.

-

This means the time complexity that contributes the most to the algorithm is used to describe the algorithm. For example:

-

3x2 + 4x + 5, which describes three nested loops, four standard loops and five statements is described as O(n2)

-

x2 is the dominating factor. As the input size grows x2 contributes proportionally more than the other factors

-

Coefficients are also removed from the Big-O notation. The 3 in 3x2 acts as a multiplicative value whose contribution becomes smaller and smaller as the size of the input increases.

-

For an arbitrarily large value such as 10100, which is 10 with one hundred 0’s after it, multiplying this number by 3 will have little contribution to the overall time complexity. 3 is therefore dropped from the overall time complexity

-

-

Responses