Computer-Science-A-level-Ocr

-

3-3-networks8 主题

-

3-2-databases7 主题

-

3-1-compression-encryption-and-hashing4 主题

-

2-5-object-oriented-languages7 主题

-

2-4-types-of-programming-language4 主题

-

2-3-software-development5 主题

-

2-2-applications-generation6 主题

-

2-1-systems-software8 主题

-

1-3-input-output-and-storage2 主题

-

1-2-types-of-processor3 主题

-

1-1-structure-and-function-of-the-processor1 主题

-

structuring-your-responses3 主题

-

the-exam-papers2 主题

-

8-2-algorithms-for-the-main-data-structures4 主题

-

8-1-algorithms10 主题

-

7-2-computational-methods11 主题

-

7-1-programming-techniques14 主题

-

capturing-selecting-managing-and-exchanging-data

-

entity-relationship-diagrams

-

data-normalisation

-

relational-databases

-

hashing

-

symmetric-vs-asymmetric-encryption

-

run-length-encoding-and-dictionary-coding

-

lossy-and-lossless-compression

-

polymorphism-oop

-

encapsulation-oop

-

inheritance-oop

-

attributes-oop

-

methods-oop

-

objects-oop

-

capturing-selecting-managing-and-exchanging-data

-

6-5-thinking-concurrently2 主题

-

6-4-thinking-logically2 主题

-

6-3-thinking-procedurally3 主题

-

6-2-thinking-ahead1 主题

-

6-1-thinking-abstractly3 主题

-

5-2-moral-and-ethical-issues9 主题

-

5-1-computing-related-legislation4 主题

-

4-3-boolean-algebra5 主题

-

4-2-data-structures10 主题

-

4-1-data-types9 主题

-

3-4-web-technologies16 主题

-

environmental-effects

-

automated-decision-making

-

computers-in-the-workforce

-

layout-colour-paradigms-and-character-sets

-

piracy-and-offensive-communications

-

analysing-personal-information

-

monitoring-behaviour

-

censorship-and-the-internet

-

artificial-intelligence

-

the-regulation-of-investigatory-powers-act-2000

-

the-copyright-design-and-patents-act-1988

-

the-computer-misuse-act-1990

-

the-data-protection-act-1998

-

adder-circuits

-

flip-flop-circuits

-

simplifying-boolean-algebra

-

environmental-effects

stages-of-compilation

Stages of Compilation

What is compilation?

-

Compilation is a process that translates a program written in a high-level programming language into machine code

-

Only machine code can be executed by a computer

-

There are four stages involved in this process:

-

Lexical Analysis

-

Syntax Analysis

-

Code Generation

-

Optimisation

-

Lexical analysis

-

In A Level Computer Science, lexical analysis means studying the words or vocabulary of a language

-

This stage involves identifying lexical ‘tokens’ in the code

-

Tokens represent small meaningful units in the programming language, such as:

-

Keywords

-

var, const, function, for, while, if

-

-

Identifiers

-

Variable names, function names

-

-

Operators

-

‘+’, ‘++’, ‘-‘, ‘*’

-

-

Separators

-

‘,’ ‘;’, ‘{‘, ‘}’, ‘(‘, ‘)’

-

-

-

During this stage, unnecessary elements like comments and whitespace are ignored

-

For example, if the following code is being compiled:

var x = function(x,y) {

if(x>2) {

return x*y;

}

return x+y;

}

-

The result of lexical analysis is a token table

|

|

Token |

Type |

|---|---|---|

|

1 |

var |

Keyword |

|

2 |

x |

Identifier |

|

3 |

= |

Operator |

|

4 |

function |

Keyword |

|

5 |

( |

Separator |

|

6 |

x |

Identifier |

|

7 |

, |

Separators |

|

8 |

y |

Identifier |

|

9 |

) |

Separator |

|

10 |

{ |

Separator |

|

11 |

return |

Keyword |

|

12 |

x |

Identifier |

|

13 |

* |

Operator |

|

14 |

y |

Identifier |

|

15 |

; |

Separator |

|

16 |

} |

Separator |

Syntax analysis

-

Now that tokens have been identified, syntax analysis makes sure they all adhere to the syntax rules of the programming language

-

A symbol, e.g. ‘$’ could be a valid token but not a valid character according to particular programming languages

-

The dollar symbol would be flagged as breaking the syntax rules

-

Other syntax errors programmers commonly make include mismatched parentheses or missing semicolons

-

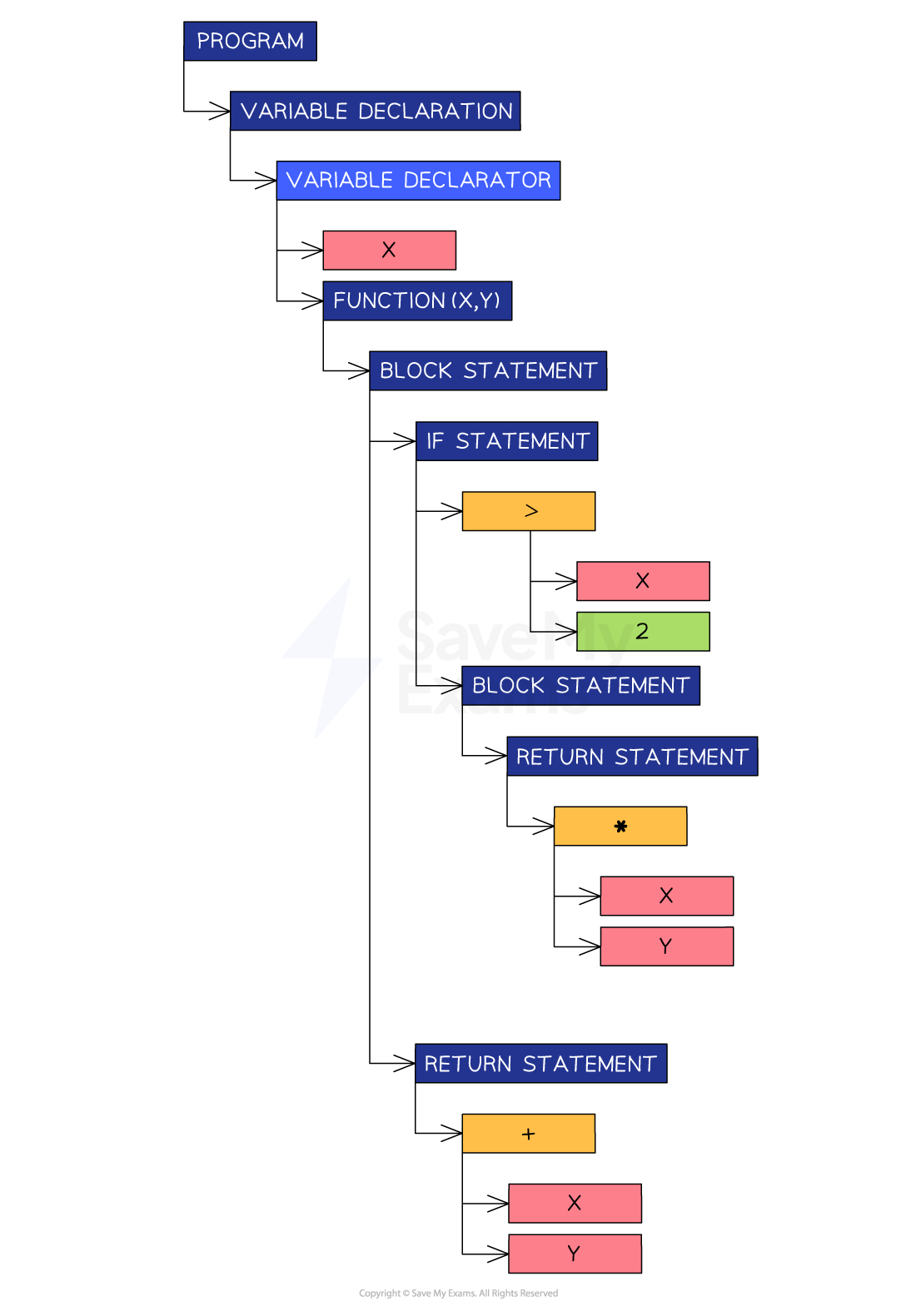

If the code passes the syntax analysis, the compiler can create an Abstract Syntax Tree (AST)

-

An AST is a graph-based representation of the code being compiled

-

An AST is an efficient way to represent the code for the next step

Example abstract syntax tree

-

For the same code as above, the following abstract syntax tree can be created

Abstract syntax tree

Code generation

-

This step takes the AST and traverses it to generate object code that can be executed by the computer

Optimisation

-

This step modifies the code to make it more efficient without changing its functionality

-

This is important to attempt because it reduces the memory required to run the code, which leads to faster execution

-

A common optimisation action is removing duplicate code

-

If an ‘add’ function is written twice in the source code, a sophisticated compiler will notice this and include it only once in the object code

Worked Example

Imogen is writing application software for her company and is ready to compile it.

const celsius = (fahrenheit) => { return (5/9) * (fahrenheit-32);

}Referring to the example above, explain what happens during Lexical Analysis.

[2]

How to answer this question:

-

Recall the purpose of lexical analysis and what it aims to produce

-

Recall the different types of tokens that can be identified in the code

-

Use examples from the code block to write your answer

Answer:

Answer that gets full marks:

‘Lexical analysis’ breaks the code into tokens, ignoring whitespace and comments. Tokens are identified by their type:

-

keyword: ‘return’

-

operator: ‘*’, ‘-‘

-

identifier: celsius, fahrenheit

-

delimiter: ‘;’, ‘(‘, ‘)’ etc

When all tokens have been identified, a token table is produced for the next step in the compilation.

Acceptable answers you could have given instead:

The compiler will look at all the lexical tokens in the code e.g. ‘fahrenheit’ is an identifier, ‘return’ is a keyword. All the tokens are placed into a tokens table for the next step.

Worked Example

State the name of the stage of compilation that directly follows Lexical Analysis.

[1]

Answer:

Answer that gets full marks:

Syntax analysis.

Responses